ReInc: Scaling Training of Dynamic Graph Neural Networks

Published at: arXiv, 2025

Abstract

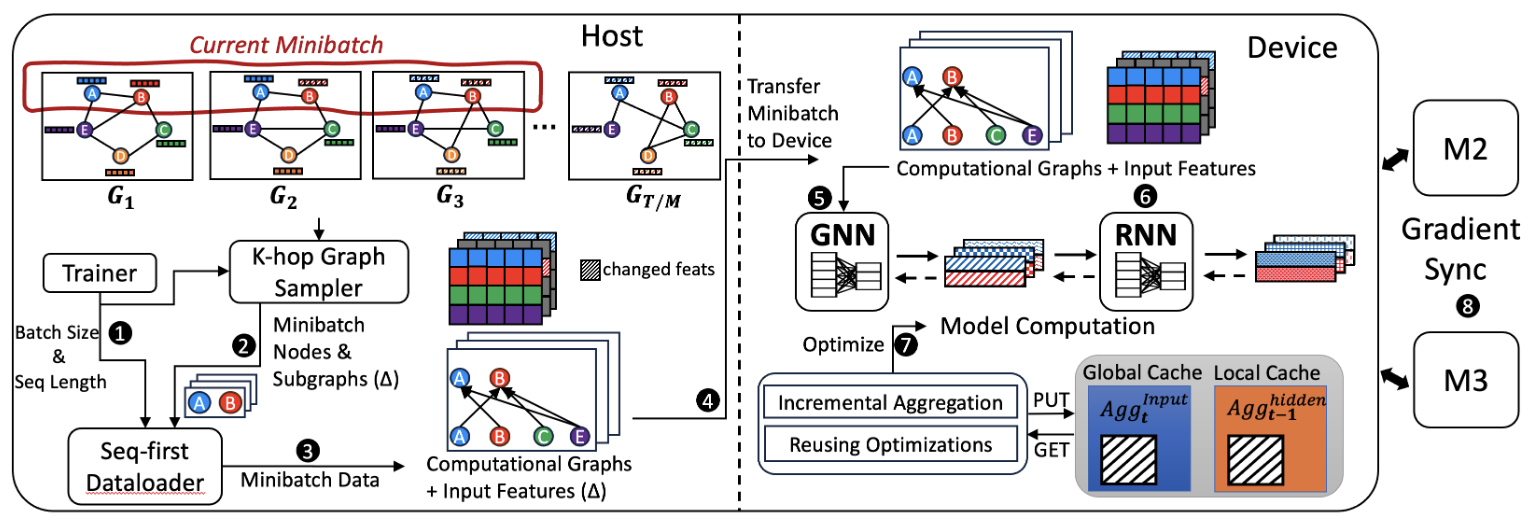

Dynamic Graph Neural Networks (DGNNs) have gained widespread attention because they apply to diverse domains such as traffic network prediction, epidemiological forecasting, and social network analysis. In this paper, we present ReInc, a system designed to enable efficient and scalable training of DGNNs on large-scale graphs. ReInc introduces optimizations that exploit the combination of Graph Neural Networks (GNNs) and Recurrent Neural Networks (RNNs) inherent in DGNNs. By reusing intermediate results and incrementally computing aggregations across consecutive graph snapshots, ReInc significantly improves computational efficiency. To support these optimizations, ReInc incorporates a novel two-level caching mechanism with a specialized caching policy aligned to the DGNN execution workflow. Additionally, ReInc addresses the challenges of managing structural and temporal dependencies in dynamic graphs through a new distributed training strategy.
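As a rough illustration of computing aggregations incrementally across consecutive snapshots, the sketch below assumes a single sum-aggregation layer over node features that stay fixed between the two snapshots. It is not ReInc's implementation, and the function names `full_aggregate` and `incremental_aggregate` are hypothetical. Because summation is linear, the aggregates for snapshot t+1 can be derived from the reused aggregates of snapshot t by adding the contributions of inserted edges and subtracting those of deleted edges, so the work scales with the edge delta rather than with the whole graph.

```python
from collections import defaultdict


def full_aggregate(edges, feats, dim):
    """Recompute from scratch: agg[v] = sum of feats[u] over all edges (u, v)."""
    agg = defaultdict(lambda: [0.0] * dim)
    for u, v in edges:
        row = agg[v]
        for i, x in enumerate(feats[u]):
            row[i] += x
    return dict(agg)


def incremental_aggregate(prev_agg, added, removed, feats, dim):
    """Hypothetical delta update: start from the previous snapshot's aggregates
    and apply only the changed edges. Valid because sum aggregation is linear;
    assumes node features are unchanged between the two snapshots."""
    agg = {v: row[:] for v, row in prev_agg.items()}  # reuse the previous result
    for edges, sign in ((added, 1.0), (removed, -1.0)):
        for u, v in edges:
            row = agg.setdefault(v, [0.0] * dim)
            for i, x in enumerate(feats[u]):
                row[i] += sign * x
    return agg


# Usage: two consecutive snapshots differing by one deleted and one inserted edge.
dim = 2
feats = {0: [1.0, 0.0], 1: [0.0, 2.0], 2: [3.0, 1.0]}
g_t = [(0, 1), (1, 2), (2, 1)]
g_next = [(0, 1), (2, 1), (0, 2)]  # edge (1, 2) removed, edge (0, 2) added
agg_t = full_aggregate(g_t, feats, dim)
agg_next = incremental_aggregate(agg_t, added=[(0, 2)], removed=[(1, 2)],
                                 feats=feats, dim=dim)
print(agg_next)
```

For non-linear aggregators such as max, or when node features also change between snapshots, removed contributions cannot simply be subtracted, so a delta update of this form would need extra bookkeeping.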

The distributed training strategy eliminates the communication overhead of accessing remote features and redistributing intermediate results. Experimental results across various DGNN architectures and real-world graph datasets show that ReInc achieves up to an order-of-magnitude speedup over state-of-the-art frameworks.
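The two-level caching mechanism mentioned in the abstract can be pictured, under loose assumptions, as a small fast tier backed by a larger slow tier, both holding per-snapshot intermediate results. The sketch below uses plain LRU eviction in both tiers purely as a placeholder; ReInc's actual policy is specialized to the DGNN execution workflow and is not reproduced here. The class `TwoLevelCache`, its capacities, and the mapping of tiers to, for example, GPU and host memory are hypothetical.

```python
from collections import OrderedDict


class TwoLevelCache:
    """Hypothetical two-tier LRU cache. The fast tier stands in for scarce,
    fast memory (e.g. GPU); the slow tier for larger, slower memory (e.g. host).
    Fast-tier victims are demoted to the slow tier; slow-tier victims are dropped
    and would have to be recomputed."""

    def __init__(self, fast_capacity, slow_capacity):
        self.fast = OrderedDict()
        self.slow = OrderedDict()
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity

    def get(self, key):
        """Return the cached value, or None on a miss (caller recomputes)."""
        if key in self.fast:
            self.fast.move_to_end(key)  # mark as most recently used
            return self.fast[key]
        if key in self.slow:
            value = self.slow.pop(key)
            self.put(key, value)  # promote a slow-tier hit to the fast tier
            return value
        return None

    def put(self, key, value):
        self.slow.pop(key, None)  # keep each key in at most one tier
        self.fast[key] = value
        self.fast.move_to_end(key)
        if len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # fast-tier LRU
            self.slow[old_key] = old_value  # demote instead of discarding
            if len(self.slow) > self.slow_capacity:
                self.slow.popitem(last=False)  # slow-tier LRU is dropped


# Usage: cache intermediate results keyed by snapshot index.
cache = TwoLevelCache(fast_capacity=2, slow_capacity=2)
for t in range(4):
    cache.put(t, f"agg@{t}")
hit = cache.get(0)  # served from the slow tier, then promoted
print(hit, list(cache.fast), list(cache.slow))
```

Demoting fast-tier victims instead of dropping them means a later request can be served from the slow tier rather than triggering recomputation. A workflow-aware policy could replace LRU by exploiting the known order in which snapshots are visited.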